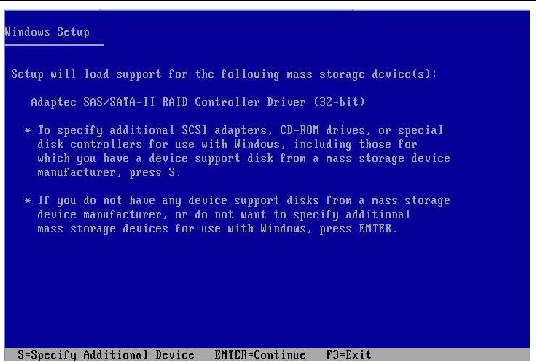

Oracle VM VirtualBox can emulate the most common types of hard disk controllers typically found in computing devices: IDE, SATA (AHCI), SCSI, SAS, USB-based, and NVMe mass storage devices. IDE (ATA) controllers are a backwards-compatible yet very advanced extension of the disk controller in the IBM PC/AT (1984). I de-selected the 73GB SCSI internal hard drive since this disk will be used exclusively in the next section to create a single 'Volume Group' that will be used for all iSCSI based shared disk storage requirements for Oracle Clusterware and Oracle RAC.

Install Oracle 10g Release 2 RAC using SCSI

Introduction

We will be installing Oracle 10g Release 2 RAC on two desktop computers using external SCSI as shared storage and will be using raw devices for the OCR and Voting Disk and ASM for the database files.

Linux Operating System specific requirements

Install the Linux OS with default options (or you can choose everything) on both nodes.

Once RHEL5 has been installed, make sure you have the following RPMs; if not, install them on both nodes.

(Metalink Note 169706.1 has a list of RPMs required for Oracle Software Installation).

For our 10G RAC Setup, we must have the following RPMs. All of these RPMs are available on the RHEL5 media. (If you select the defaults while installing RHEL5, it installs everything except the following)

# rpm -Uvh compat-gcc-34-3.4.6-4.i386.rpm

# rpm -Uvh compat-db-4.2.52-5.1.i386.rpm

# rpm -Uvh compat-gcc-34-c++-3.4.6-4.i386.rpm

# rpm -Uvh libXp-1.0.0-8.1.el5.i386.rpm

# rpm -Uvh libstdc++43-devel-4.3.2-7.el5.i386.rpm

# rpm -Uvh libaio-devel-0.3.106-3.2.i386.rpm

# rpm -Uvh sysstat-7.0.2-3.el5.i386.rpm

# rpm -Uvh unixODBC-2.2.11-7.1.i386.rpm

# rpm -Uvh unixODBC-devel-2.2.11-7.1.i386.rpm
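Before installing, it can help to see which of the packages above are actually missing on a node. The following is a minimal sketch, not part of the original procedure: `report_missing` is a hypothetical helper name, and the package names are simply the RPM names from the commands above with the version suffix dropped.

```shell
#!/bin/sh
# Package names taken from the rpm commands above (versions omitted).
REQUIRED_PKGS="compat-gcc-34 compat-db compat-gcc-34-c++ libXp libstdc++43-devel libaio-devel sysstat unixODBC unixODBC-devel"

# report_missing (hypothetical helper): reads installed package names,
# one per line, on stdin and prints each requested name that is absent.
report_missing() {
    local installed pkg
    installed=$(cat)
    for pkg in "$@"; do
        # -x: whole-line match, -F: fixed string (so "c++" is not a regex)
        printf '%s\n' "$installed" | grep -qxF -- "$pkg" || echo "$pkg"
    done
}

# Typical use on a node (requires the rpm command):
#   rpm -qa --qf '%{NAME}\n' | report_missing $REQUIRED_PKGS
```

Anything the helper prints still needs to be installed from the RHEL5 media on that node.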

Download and Install Oracle ASMLib

Download the required RPMs from the following link

Search for “Intel IA32 (x86) Architecture” and download and install the following RPMs

• oracleasm-support-2.1.3-1.el5

• oracleasmlib-2.0.4-1.el5

• oracleasm-2.6.18-128.el5-2.0.5-1.el5

Login as ROOT

# rpm -Uvh oracleasm-support-2.1.3-1.el5.i386.rpm

# rpm -Uvh oracleasm-2.6.18-128.el5-2.0.5-1.el5.i686.rpm

# rpm -Uvh oracleasmlib-2.0.4-1.el5.i386.rpm

CVU

Here are the steps to install cvuqdisk package (required to run ‘cluvfy’ utility)

Download the latest version from the following link

http://www.oracle.com/technology/products/database/clustering/cvu/cvu_download_homepage.html

As ORACLE user, unzip the downloaded file (cvupack_Linux_x86.zip) to a location from where you would like to run the ‘cluvfy’ utility. (for example /u01/app/oracle)

Copy the rpm (cvuqdisk-1.0.1-1.rpm or the latest version) to a local directory. You can find the rpm in the rpm subdirectory of the directory in which you have installed the CVU.

Set the CVUQDISK_GRP environment variable to the group that should own this binary; typically it is the “dba” group.

As ROOT user

# export CVUQDISK_GRP=dba

Erase any existing package

# rpm -e cvuqdisk

Install the rpm

# rpm -iv cvuqdisk-1.0.1-1.rpm

Verify the package

# rpm -qa | grep cvuqdisk

Disable SELinux (Metalink Note: 457458.1)

1. Editing /etc/selinux/config

• Change the SELINUX value to “SELINUX=disabled”.

• Reboot the server

To check the status of SELinux, issue:

# /usr/sbin/sestatus
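If you want to confirm the configured value on both nodes without rebooting first, the setting can be read straight from the file. This is a small sketch under the assumption that the file uses the usual `SELINUX=value` line; `selinux_config_mode` is a hypothetical helper name.

```shell
#!/bin/sh
# selinux_config_mode (hypothetical helper): prints the value of the
# SELINUX= line in the given config file (default /etc/selinux/config).
selinux_config_mode() {
    local file="${1:-/etc/selinux/config}"
    # The "=" in the pattern keeps SELINUXTYPE= from matching.
    awk -F= '/^SELINUX=/ { print $2 }' "$file"
}

# Typical use:
#   selinux_config_mode            # expect "disabled" after the edit above
```

Note that this only shows what is configured for the next boot; sestatus reports the state that is currently active.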

SCP and SSH Location

The installer requires scp and ssh to be located in /usr/local/bin on the RedHat Linux platform.

If scp and ssh are not in this location, create symbolic links in /usr/local/bin to the location where scp and ssh are found.

# ln -s /usr/bin/scp /usr/local/bin/scp

# ln -s /usr/bin/ssh /usr/local/bin/ssh

Configure /etc/hosts

Make sure both the nodes have the same /etc/hosts file.

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

192.168.0.101 rklx1.world.com rklx1 # Public Network on eth0

192.168.0.102 rklx1-vip.world.com rklx1-vip # VIP on eth0

10.10.10.1 rklx1-priv.world.com rklx1-priv # Private InterConnect on eth1

192.168.0.105 rklx2.world.com rklx2 # Public Network on eth0

192.168.0.106 rklx2-vip.world.com rklx2-vip # VIP on eth0

10.10.10.2 rklx2-priv.world.com rklx2-priv # Private InterConnect on eth1
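One way to check that both nodes agree is to look up each cluster name in the hosts file on every node and compare the results. The sketch below assumes the file layout shown above; `hosts_ip` is a hypothetical helper name, not a standard tool.

```shell
#!/bin/sh
# hosts_ip (hypothetical helper): prints the IP address that a
# hosts-format file maps to the given name, ignoring comments.
hosts_ip() {
    local file="$1" name="$2"
    awk -v n="$name" '
        { sub(/#.*/, "") }    # strip trailing comments before matching
        { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
    ' "$file"
}

# Typical use (run on each node and compare the output):
#   for n in rklx1 rklx1-vip rklx1-priv rklx2 rklx2-vip rklx2-priv; do
#       echo "$n $(hosts_ip /etc/hosts "$n")"
#   done
```

An empty address next to a name means that node's /etc/hosts is missing the entry.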

Verify Memory and CPU Information

# grep MemTotal /proc/meminfo

# grep "model name" /proc/cpuinfo

Confirm if you are running 32 bit or 64 bit OS

# getconf LONG_BIT

Find the interface names

# /sbin/ifconfig -a

# ping ${NODE1}.${DOMAIN}

# ping ${NODE1}

# ping ${NODE1}-priv.${DOMAIN}

# ping ${NODE1}-priv

Configure the Network

Make sure files ‘ifcfg-eth0’ and ‘ifcfg-eth1’ on both nodes have the IPADDR, NETMASK, TYPE and BOOTPROTO set correctly (by default they are NOT)

NODE 1

[root@rklx1 network-scripts]# pwd

/etc/sysconfig/network-scripts

[root@rklx1 network-scripts]# cat ifcfg-eth0

# Intel Corporation 82801G (ICH7 Family) LAN Controller

DEVICE=eth0

BOOTPROTO=static

IPADDR=192.168.0.101

NETMASK=255.255.255.0

HWADDR=55:19:12:DD:E6:12

ONBOOT=yes

TYPE=Ethernet

[root@rklx1 network-scripts]# cat ifcfg-eth1

# ADMtek NC100 Network Everywhere Fast Ethernet 10/100

DEVICE=eth1

BOOTPROTO=static

IPADDR=10.10.10.1

HWADDR=55:24:KK:14:15:KO

ONBOOT=yes

TYPE=Ethernet

[root@rklx1 network-scripts]#

NODE 2

[root@rklx2 network-scripts]# pwd

/etc/sysconfig/network-scripts

[root@rklx2 network-scripts]# cat ifcfg-eth0

# Intel Corporation 82562ET/EZ/GT/GZ – PRO/100 VE (LOM) Ethernet Controller

DEVICE=eth0

BOOTPROTO=static

IPADDR=192.168.0.105

NETMASK=255.255.255.0

HWADDR=00:12:70:0L:24:2H

ONBOOT=yes

TYPE=Ethernet

[root@rklx2 network-scripts]# cat ifcfg-eth1

# ADMtek NC100 Network Everywhere Fast Ethernet 10/100

DEVICE=eth1

BOOTPROTO=static

IPADDR=10.10.10.2

HWADDR=00:56:DJ:18:17:FF

ONBOOT=yes

TYPE=Ethernet
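The four settings named above can be checked mechanically in each interface file. This is a minimal sketch; `ifcfg_missing_keys` is a hypothetical helper name, and it only tests that a key is present, not that its value is right.

```shell
#!/bin/sh
# ifcfg_missing_keys (hypothetical helper): prints each of the four
# required keys that has no KEY= line in the given ifcfg file.
ifcfg_missing_keys() {
    local file="$1" key
    for key in IPADDR NETMASK TYPE BOOTPROTO; do
        grep -q "^${key}=" "$file" || echo "$key"
    done
}

# Typical use on each node:
#   cd /etc/sysconfig/network-scripts
#   for f in ifcfg-eth0 ifcfg-eth1; do
#       echo "$f: $(ifcfg_missing_keys "$f" | tr '\n' ' ')"
#   done
```

A file that lists nothing after its name has all four keys set.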

Create the Groups and User Account

Create the user accounts and assign groups on all nodes. We will create a group ‘dba’ and user ‘oracle’.

(I prefer to use the OS GUI to create the accounts, you can use the below commands to create)

# /usr/sbin/groupadd -g 510 dba

# /usr/sbin/useradd -c "Oracle User" -u 520 -m -d /home/oracle -g dba oracle

# id oracle

uid=520(oracle) gid=510(dba) groups=510(dba)

The User ID and Group IDs must be the same on all cluster nodes.

# passwd oracle

Changing password for user oracle.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

Create Mount Points

Now create mount points where oracle software will be installed on both nodes.

Login as ROOT

# mkdir -p /u01/app/oracle

# chown -R oracle:dba /u01/app/oracle

# chmod -R 775 /u01/app/oracle

Configure Kernel Parameters

Login as ROOT and configure the Linux kernel parameters on both nodes.

cat >> /etc/sysctl.conf << EOF
kernel.shmall = 2097152
kernel.shmmax = 536870912
kernel.shmmni = 4096
kernel.sem = 250 32000 100 128
fs.file-max = 658576
net.ipv4.ip_local_port_range = 1024 65000
net.core.rmem_default = 262144
net.core.wmem_default = 262144
net.core.rmem_max = 16777216
net.core.wmem_max = 1048536
EOF

/sbin/sysctl -p

Setting Shell Limits for the oracle User

Oracle recommends setting limits on the number of processes and the number of open files each Linux account may use.

Login as ROOT

cat >> /etc/security/limits.conf << EOF
oracle soft nproc 2047
oracle hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536
EOF

cat >> /etc/pam.d/login << EOF
session required /lib/security/pam_limits.so
EOF

cat >> /etc/profile << 'EOF'
if [ $USER = "oracle" ]; then
  if [ $SHELL = "/bin/ksh" ]; then
    ulimit -u 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
  umask 022
fi
EOF

cat >> /etc/csh.login << 'EOF'
if ( $USER == "oracle" ) then
  limit maxproc 16384
  limit descriptors 65536
  umask 022
endif
EOF

(The here-document delimiter is quoted as 'EOF' so that $USER and $SHELL are written to the files literally instead of being expanded by the root shell.)

Configure the Hangcheck Timer

Login as ROOT

modprobe hangcheck-timer hangcheck_tick=30 hangcheck_margin=180

cat >> /etc/rc.d/rc.local << EOF
modprobe hangcheck-timer hangcheck_tick=30 hangcheck_margin=180
EOF

Configure SSH for User Equivalence

During the installation of Oracle RAC 10gR2, OUI needs to copy files to and execute programs on the other nodes in the cluster. In order for OUI to do that, you must configure SSH to allow user equivalence. Establishing user equivalence with SSH provides a secure means of copying files and executing programs on other nodes in the cluster without requiring password prompts.

The first step is to generate public and private keys for SSH. SSH protocol version 2 can authenticate with either RSA or DSA keys, so we will create both types of keys to ensure that SSH can use either. The ssh-keygen program will generate public and private keys of either type depending upon the parameters passed to it.

When you run ssh-keygen, you will be prompted for a location to save the keys. Accept the default by pressing Enter. You will then be asked for a passphrase. Enter a passphrase and then enter it again to confirm. Once done, you will have four files in the ~/.ssh directory: id_rsa, id_rsa.pub, id_dsa, and id_dsa.pub. The id_rsa and id_dsa files are your private keys and must not be shared with anyone. The id_rsa.pub and id_dsa.pub files are your public keys and must be copied to each of the other nodes in the cluster.

Login as ORACLE for all the actions below for ssh setup

Do the following on both nodes

$ mkdir ~/.ssh

$ chmod 755 ~/.ssh

$ /usr/bin/ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

7c:eg:72:71:82:da:61:ad:c2:f2:0d:h6:kf:20:fc:27 oracle@rklx1.world.com

$ /usr/bin/ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_dsa.

Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.

The key fingerprint is:

7c:eg:72:71:82:da:61:ad:c2:f2:0d:h6:kf:20:fc:27 oracle@rklx1.world.com

Now the contents of the public key files id_rsa.pub and id_dsa.pub on each node must be copied to the ~/.ssh/authorized_keys file on every other node. Use ssh to copy the contents of each file to the ~/.ssh/authorized_keys file. Note that the first time you access a remote node with ssh, its RSA key will be unknown and you will be prompted to confirm that you wish to connect to the node; type ‘yes’. SSH will record the RSA key for the remote node and will not prompt for this on subsequent connections to that node.

From NODE 1 (copy the local account’s keys so that ssh to the local node will work):

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

$ ssh oracle@rklx2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host ‘rklx2 (192.168.0.105)’ can’t be established.

RSA key fingerprint is d1:23:a7:ef:c1:gc:2e:19:h2:13:30:59:65:h8:1b:71.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘rklx2,192.168.0.105’ (RSA) to the list of known hosts.

oracle@rklx2’s password:

$ ssh oracle@rklx2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

oracle@rklx2’s password:

$ chmod 644 ~/.ssh/authorized_keys

From the NODE 2. Notice that this time SSH will prompt for the passphrase you used when creating the keys rather than the oracle password. This is because the first node (rklx1) now knows the public keys for the second node and SSH is now using a different authentication protocol. Note, if you didn’t enter a passphrase when creating the keys with ssh-keygen, you will not be prompted for one here.

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

$ ssh oracle@rklx1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host ‘rklx1 (192.168.0.101)’ can’t be established.

RSA key fingerprint is bd:0e:39:2a:23:2d:ca:f9:ea:71:f5:3d:d3:dd:3b:65.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘rklx1,192.168.0.101’ (RSA) to the list of known hosts.

Enter passphrase for key ‘/home/oracle/.ssh/id_rsa’:

$ ssh oracle@rklx1 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Enter passphrase for key ‘/home/oracle/.ssh/id_rsa’:

$ chmod 644 ~/.ssh/authorized_keys

Establish User Equivalence

Finally, after all of the generating of keys, copying of files, and repeatedly entering passwords and passphrases, you’re ready to establish user equivalence. Once user equivalence is established, you will not be prompted for a password again.

As oracle on the node where the Oracle 10gR2 software will be installed (host: rklx1): User Equivalence is established for the current session only

$ exec /usr/bin/ssh-agent $SHELL

$ /usr/bin/ssh-add

Enter passphrase for /home/oracle/.ssh/id_rsa:

Identity added: /home/oracle/.ssh/id_rsa (/home/oracle/.ssh/id_rsa)

Identity added: /home/oracle/.ssh/id_dsa (/home/oracle/.ssh/id_dsa)

Test Connectivity

Once you are done with the above steps, you should be able to ssh without being asked for password to any node in the cluster (NODE1 and NODE2 in our case).

You MUST test connectivity in each direction from all servers (to itself and other servers in the cluster) otherwise your installation of CRS will fail.

$ ssh rklx1 date

$ ssh rklx1.world.com date

$ ssh rklx1-priv date

$ ssh rklx1-priv.world.com date

$ ssh rklx2 date

$ ssh rklx2.world.com date

$ ssh rklx2-priv date

$ ssh rklx2-priv.world.com date

Answer ‘yes’ when you see the message below. It appears only the first time an operation on a remote node is performed. By testing the connectivity, you not only ensure that remote operations work properly, you also complete the initial security key exchange.

The authenticity of host ‘rklx2 (192.168.0.105)’ can’t be established.

RSA key fingerprint is df:d3:52:16:df:2f:51:d5:df:gg:6a:aa:4b:43:a6:a5.

Are you sure you want to continue connecting (yes/no)? yes
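Once every host key has been accepted by hand, the same eight tests can be repeated in a loop, which is convenient when re-checking after a reboot. This is a sketch only: `cluster_names` is a hypothetical helper, and the `-priv` suffix and the world.com domain are taken from the /etc/hosts file used in this guide.

```shell
#!/bin/sh
# cluster_names (hypothetical helper): for each node, prints the four
# names tested above: short, fully qualified, private, private FQDN.
cluster_names() {
    local domain="$1" node
    shift
    for node in "$@"; do
        printf '%s\n' "$node" "$node.$domain" "$node-priv" "$node-priv.$domain"
    done
}

# Typical use (as oracle, on every node, after ssh-add):
#   for h in $(cluster_names world.com rklx1 rklx2); do
#       ssh -o BatchMode=yes "$h" date || echo "FAILED: $h"
#   done
```

BatchMode=yes makes ssh fail instead of prompting, so any name that still asks for a password, a passphrase, or a host key confirmation is reported as FAILED.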

MAKE SURE VIP ip’s ARE NOT PLUMBED.

Login as ROOT

# ping rklx1-vip

# ping rklx2-vip

Example Output:

PING rklx1-vip.world.com (192.168.0.102) 56(84) bytes of data.

From rklx1.world.com (192.168.0.101) icmp_seq=1 Destination Host Unreachable

How to create partitions on SCSI Disk

Purpose: Creating RAW partitions for OCR and Voting Disk and

one big raw partition for ASM

Available Hardware: External SCSI Disk – size 147 GB

Create two 128MB RAW partitions for OCR (from Primary Partition)

and three 128MB RAW partitions for Voting Disk (from Extended Partition)

and leave the rest for ASM (from Extended Partition)

You need to run the following from NODE 1 only

[root@rklx1]# fdisk -l

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 1217 9775521 83 Linux

/dev/sdb2 1218 7297 48837600 83 Linux

/dev/sdb3 7298 17882 85024012+ 83 Linux

[root@rklx1]# fdisk /dev/sdb

The number of cylinders for this disk is set to 17882.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

If there are any existing partitions, Delete all the existing partitions

Command (m for help): d

Partition number (1-4): 1

Command (m for help): d

Partition number (1-4): 2

Command (m for help): d

Selected partition 3

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

We will create two small primary partitions for OCR and one extended partition, then from extended partition, we will create three small partitions (128M) for the voting disk and will leave the rest for the ASM.

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-17882, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-17882, default 17882): +128M

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 2

First cylinder (18-17882, default 18):

Using default value 18

Last cylinder or +size or +sizeM or +sizeK (18-17882, default 17882): +128M

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

/dev/sdb2 18 34 136552+ 83 Linux

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Partition number (1-4): 3

First cylinder (35-17882, default 35):

Using default value 35

Last cylinder or +size or +sizeM or +sizeK (35-17882, default 17882):

Using default value 17882

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

/dev/sdb2 18 34 136552+ 83 Linux

/dev/sdb3 35 17882 143364060 5 Extended

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (35-17882, default 35):

Using default value 35

Last cylinder or +size or +sizeM or +sizeK (35-17882, default 17882): +128M

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

/dev/sdb2 18 34 136552+ 83 Linux

/dev/sdb3 35 17882 143364060 5 Extended

/dev/sdb5 35 51 136521 83 Linux

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (52-17882, default 52):

Using default value 52

Last cylinder or +size or +sizeM or +sizeK (52-17882, default 17882): +128M

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (69-17882, default 69):

Using default value 69

Last cylinder or +size or +sizeM or +sizeK (69-17882, default 17882): +128M

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

/dev/sdb2 18 34 136552+ 83 Linux

/dev/sdb3 35 17882 143364060 5 Extended

/dev/sdb5 35 51 136521 83 Linux

/dev/sdb6 52 68 136521 83 Linux

/dev/sdb7 69 85 136521 83 Linux

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (86-17882, default 86):

Using default value 86

Last cylinder or +size or +sizeM or +sizeK (86-17882, default 17882):

Using default value 17882

Command (m for help): p

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux

/dev/sdb2 18 34 136552+ 83 Linux

/dev/sdb3 35 17882 143364060 5 Extended

/dev/sdb5 35 51 136521 83 Linux

/dev/sdb6 52 68 136521 83 Linux

/dev/sdb7 69 85 136521 83 Linux

/dev/sdb8 86 17882 142954371 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
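Each +128M partition in the session above ends up 17 cylinders long. The arithmetic below reproduces that figure, assuming fdisk treats +128M as 128 x 1024 x 1024 bytes and rounds up to a whole cylinder; that rounding rule is inferred from the listing, not taken from fdisk documentation. `cylinders_for_mib` is a hypothetical helper name.

```shell
#!/bin/sh
# cylinders_for_mib (hypothetical helper): whole cylinders needed to hold
# the given number of MiB, rounding up (integer ceiling division).
cylinders_for_mib() {
    local mib="$1" cyl_bytes="$2"
    echo $(( (mib * 1024 * 1024 + cyl_bytes - 1) / cyl_bytes ))
}

# Cylinder size from the fdisk header: 16065 * 512 = 8225280 bytes.
cylinders_for_mib 128 8225280   # prints 17
```

Five partitions of 17 cylinders each occupy cylinders 1 to 85, which matches the listing: /dev/sdb7 ends at cylinder 85 and the ASM partition starts at 86.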

RedHat 5 only: instead of creating a rawdevices file, the udev system is used through /etc/udev/rules.d/60-raw.rules.

{you need to add this on all nodes in the cluster}

NOTE: Make sure to replace sdb with your own device name. I have one local hard drive on node 1 (rklx1), two local hard drives on node 2 (rklx2), and one external SCSI disk, so in my case the SCSI disk shows up as /dev/sdb on node 1 and as /dev/sdc on node 2. Going by this example, you have to replace sdbX with sdcX on node 2.

vi /etc/udev/rules.d/60-raw.rules and add the following lines

ACTION=="add", KERNEL=="sdb1", RUN+="/bin/raw /dev/raw/raw1 %N"

ACTION=="add", KERNEL=="sdb2", RUN+="/bin/raw /dev/raw/raw2 %N"

ACTION=="add", KERNEL=="sdb5", RUN+="/bin/raw /dev/raw/raw3 %N"

ACTION=="add", KERNEL=="sdb6", RUN+="/bin/raw /dev/raw/raw4 %N"

ACTION=="add", KERNEL=="sdb7", RUN+="/bin/raw /dev/raw/raw5 %N"

KERNEL=="raw[1]*", OWNER="oracle", GROUP="dba", MODE="660"

KERNEL=="raw[2-5]*", OWNER="oracle", GROUP="dba", MODE="660"

The first part will create the raw devices, the second will set the correct ownership and permissions.
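Because the shared disk can appear under a different device name on each node, it may be easier to generate the rules than to edit them by hand. The sketch below prints the same seven rules for whatever disk name it is given; `raw_rules` is a hypothetical helper, and the partition numbers (1, 2, 5, 6, 7) are the ones created in the fdisk session above.

```shell
#!/bin/sh
# raw_rules (hypothetical helper): prints the 60-raw.rules lines for the
# given disk name, binding partitions 1,2,5,6,7 to raw1..raw5.
raw_rules() {
    local disk="$1" n=1 part
    for part in 1 2 5 6 7; do
        # %%N prints a literal %N, which udev replaces with the device node
        printf 'ACTION=="add", KERNEL=="%s%s", RUN+="/bin/raw /dev/raw/raw%d %%N"\n' \
            "$disk" "$part" "$n"
        n=$((n + 1))
    done
    printf 'KERNEL=="raw[1]*", OWNER="oracle", GROUP="dba", MODE="660"\n'
    printf 'KERNEL=="raw[2-5]*", OWNER="oracle", GROUP="dba", MODE="660"\n'
}

# Typical use on node 2, where the shared disk is /dev/sdc:
#   raw_rules sdc >> /etc/udev/rules.d/60-raw.rules
```

Review the generated lines before appending them, since running the command twice would duplicate the rules.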

Run start_udev to create the devices from the definitions:

# /sbin/start_udev

[root@rklx1]# fdisk -l

Disk /dev/sdb: 147.0 GB, 147086327808 bytes

255 heads, 63 sectors/track, 17882 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 17 136521 83 Linux => /dev/raw/raw1: OCR

/dev/sdb2 18 34 136552+ 83 Linux => /dev/raw/raw2: OCR

/dev/sdb3 35 17882 143364060 5 Extended

/dev/sdb5 35 51 136521 83 Linux => /dev/raw/raw3: V Disk

/dev/sdb6 52 68 136521 83 Linux => /dev/raw/raw4: V Disk

/dev/sdb7 69 85 136521 83 Linux => /dev/raw/raw5: V Disk

/dev/sdb8 86 17882 142954371 83 Linux => ASM – Data files etc.

Configuring and Loading ASM

To load the ASM driver oracleasm and to mount the ASM driver filesystem, enter:

Login as ROOT

# /etc/init.d/oracleasm configure

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets (‘[]’). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: oracle

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Fix permissions of Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration [ OK ]

Creating /dev/oracleasm mount point [ OK ]

Loading module “oracleasm” [ OK ]

Mounting ASMlib driver filesystem [ OK ]

Scanning system for ASM disks [ OK ]

#

Creating ASM Disks

NOTE: Creating ASM disks is done on one RAC node only. The following commands should be executed on NODE 1 only.

I executed the following commands to create my ASM disks (make sure to change the device names).

In this example I used the partition /dev/sdb8.

Login as ROOT

# /etc/init.d/oracleasm createdisk DISK1 /dev/sdb8

Marking disk “/dev/sdb8” as an ASM disk [ OK ]

# # Replace “sdb8” with the name of your device. I used /dev/sdb8

To list all ASM disks, enter:

# /etc/init.d/oracleasm listdisks

DISK1

On all other RAC nodes, you just need to notify the system about the new ASM disks:

Login as ROOT

# /etc/init.d/oracleasm scandisks

Scanning system for ASM disks [ OK ]

# /etc/init.d/oracleasm listdisks

DISK1

RESTART THE NETWORK

Execute the following commands as root to reflect the changes.

# service network stop

# service network start

Run the cluvfy utility to verify all pre-requisites are in place

I will be using the “-osdba dba and -orainv dba” switches to suppress OS group ‘oinstall’ errors, as I have not created the ‘oinstall’ group. I prefer to keep just one group ‘DBA’ for the user ‘oracle’

After you have configured the hardware and operating systems on the new nodes, you can use this command to verify node reachability. Run the CLUVFY command to verify your installation at the post hardware installation stage.

$ ./cluvfy stage -post hwos -n rklx1,rklx2

Run the following CLUVFY to check system requirements for installing Oracle Clusterware.

It verifies the following

• User Equivalence: User equivalence exists on all the specified nodes

• Node Reachability: All the specified nodes are reachable from the local node

• Node Connectivity: Connectivity exists between all the specified nodes through the public and private network interconnections

• Administrative Privileges: The oracle user has proper administrative privileges to install Oracle Clusterware on the specified nodes

• Shared Storage Accessibility: If specified, the Oracle Cluster Registry (OCR) device and voting disk are shared across all the specified nodes

• System Requirements: All system requirements are met for installing Oracle Clusterware software, including kernel version, kernel parameters, software packages, memory, swap directory space, temp directory space, and required users and groups

• Node Applications: VIP, ONS, and GSD node applications are created for all the nodes

./cluvfy stage -pre crsinst -osdba dba -orainv dba -r 10gR2 -n rklx1,rklx2

Run the following to perform a pre-installation check for an Oracle Database with Oracle RAC installation

./cluvfy stage -pre dbinst -n rklx1,rklx2 -r 10gR2 -osdba dba -verbose

Run the CLUVFY command to obtain a detailed comparison of the properties of the reference node with all of the other nodes that are part of your current cluster environment

./cluvfy comp peer -n rklx1,rklx2 -osdba dba -orainv dba

To verify the accessibility of the cluster nodes from the local node or from any other cluster node, use the component verification command nodereach as follows:

./cluvfy comp nodereach -n rklx1,rklx2

To verify that the other cluster nodes can be reached from the local node through all the available network interfaces or through specific network interfaces, use the component verification command nodecon as follows:

./cluvfy comp nodecon -n all

Install the Oracle Clusterware

Login as ORACLE

$ unzip 10201_clusterware_linux32.zip (for example to /u01/software)

$ export ORACLE_BASE=/u01/app/oracle

I will be installing the software in the following directories, you can follow the exact OFA structure if you want.

Oracle Clusterware: /u01/app/oracle/product/crs

Oracle ASM: /u01/app/oracle/product/asm

Oracle Database: /u01/app/oracle/product/10.2.0

Make sure you can see the ‘xclock’. I login as ROOT, run xhost +, then su to oracle, and it works.

rklx1:/u01/software/clusterware $ ./runInstaller -ignoreSysPrereqs

Click Next at the Welcome Screen

Accept Default for the Inventory Location, so click next

You can specify the location where you want to install CRS and click Next

Click Next at the ‘Product-Specific Prerequisite Checks’

‘Specify Cluster Configuration’ screen shows only the RAC Node 1. Click the “Add” button to continue.

Now input the Node 2 details and click OK

Make sure Public, Private and VIP information for both the nodes are correct and click Next

By default, both interfaces show the Interface Type as Private. Click the Edit button to change ‘eth0’ to Public

Specify the OCR Location, in our case it is /dev/raw/raw1 and /dev/raw/raw2

Specify the Voting Disk Location, in our case it is /dev/raw/raw3 , /dev/raw/raw4 and /dev/raw/raw5

Run the orainstRoot.sh file first on NODE 1 and then on NODE 2 and STOP

BEFORE YOU RUN ROOT.SH, Make the following changes in srvctl and vipca under $CRS_HOME/bin on both the nodes.

# Edit the vipca and srvctl commands to add the “unset LD_ASSUME_KERNEL”

# Documented as workaround in Metalink Note 414163.1

Edit the vipca file under $CRS_HOME/bin

if [ "$arch" = "i686" -o "$arch" = "ia64" -o "$arch" = "x86_64" ]

then

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

fi

unset LD_ASSUME_KERNEL ## << Line to be added

Edit the srvctl file under $CRS_HOME/bin

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

unset LD_ASSUME_KERNEL ## << Line to be added
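Since the same edit is needed in several files and on both nodes, a quick check can show which scripts still need it. This is a minimal sketch: `needs_ld_fix` is a hypothetical helper, and it only looks for the export and unset lines shown above without modifying anything.

```shell
#!/bin/sh
# needs_ld_fix (hypothetical helper): succeeds (exit 0) when the file
# exports LD_ASSUME_KERNEL but contains no "unset LD_ASSUME_KERNEL" line.
needs_ld_fix() {
    local file="$1"
    grep -q 'export LD_ASSUME_KERNEL' "$file" &&
        ! grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$file"
}

# Typical use on each node:
#   for f in "$CRS_HOME/bin/vipca" "$CRS_HOME/bin/srvctl"; do
#       needs_ld_fix "$f" && echo "still needs the unset line: $f"
#   done
```

The check does not verify that the unset line sits after the export, so it is still worth opening each file once to confirm the placement.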

Login to NODE 2 as ROOT and run the vipca

# cd /u01/app/oracle/product/crs/bin

# ./vipca

Click Next on the first screen

Make sure you highlight eth0 (if more than one interface shows up) and click Next

On the next screen, enter the IP Alias Name; the IP Address is filled in automatically, but make sure to verify that it is correct. Enter the IP Alias Name for both the nodes and click Next.

Click ‘Finish’ at the Summary Screen

At this point, VIPCA is configured.

NOW run root.sh on NODE 1 and let it finish before you run root.sh on NODE 2

Now Come Back to NODE 1 and click ‘OK’ on the ‘Execute Configuration scripts’ screen

Clusterware installation is complete; now let’s install Oracle ASM

rklx1:/u01/software/database $ ./runInstaller -ignoreSysPrereqs

On Select Installation Type screen select Enterprise Edition

On Specify Home Details, enter the value where you want to install ASM

Check on both nodes for cluster installation mode

I see a warning on this screen because I have not created the ‘oinstall’ group; you can ignore this warning.

Select ‘Configure Automatic Storage Management (ASM)’

Select External and check mark the disk(s) shown

Click Install

BEFORE YOU RUN ROOT.SH, Make the following changes in srvctl under $ASM_HOME/bin on both nodes.

# Edit the vipca and srvctl commands to add the “unset LD_ASSUME_KERNEL”

# Documented as workaround in Metalink Note 414163.1

Run root.sh on NODE 1 and let it finish before you run root.sh on NODE 2

Now Install the Oracle Database

rklx1:/u01/software/database $ ./runInstaller -ignoreSysPrereqs

BEFORE YOU RUN ROOT.SH, Make the following changes in srvctl under $ORACLE_HOME/bin on both nodes.

# Edit the vipca and srvctl commands to add the “unset LD_ASSUME_KERNEL”

# Documented as workaround in Metalink Note 414163.1

Run root.sh on NODE 1 and let it finish before you run root.sh on NODE 2

Run the cluvfy utility to verify all pre-requisites are in place.

I will be using the “-osdba dba and -orainv dba” switches to suppress OS group ‘oinstall’ errors, as I have not created the ‘oinstall’ group. I prefer to keep just one group ‘DBA’ for the user ‘oracle’

After you have configured the hardware and operating systems on the new nodes, you can use this command to verify node reachability. Run the CLUVFY command to verify your installation at the post hardware installation stage.

$ ./cluvfy stage -post hwos -n rklx1,rklx2

Run the following CLUVFY to check system requirements for installing Oracle Clusterware.

It verifies the following

• User Equivalence: User equivalence exists on all the specified nodes

• Node Reachability: All the specified nodes are reachable from the local node

• Node Connectivity: Connectivity exists between all the specified nodes through the public and private network interconnections

• Administrative Privileges: The oracle user has proper administrative privileges to install Oracle Clusterware on the specified nodes

• Shared Storage Accessibility: If specified, the Oracle Cluster Registry (OCR) device and voting disk are shared across all the specified nodes

• System Requirements: All system requirements are met for installing Oracle Clusterware software, including kernel version, kernel parameters, software packages, memory, swap directory space, temp directory space, and required users and groups

• Node Applications: VIP, ONS, and GSD node applications are created for all the nodes

./cluvfy stage -pre crsinst -osdba dba -orainv dba -r 10gR2 -n rklx1,rklx2

Run the following to verify that your system is prepared to create the Oracle Database with Oracle RAC successfully.

./cluvfy stage -pre dbcfg -n rklx1,rklx2 -d /u01/app/oracle/product/10.2.0

Run the following to perform a pre-installation check for an Oracle Database with Oracle RAC installation

./cluvfy stage -pre dbinst -n rklx1,rklx2 -r 10gR2 -osdba dba -verbose

Run the CLUVFY command to obtain a detailed comparison of the properties of the reference node with all of the other nodes that are part of your current cluster environment

./cluvfy comp peer -n rklx1,rklx2 -osdba dba -orainv dba

To verify the accessibility of the cluster nodes from the local node or from any other cluster node, use the component verification command nodereach as follows:

./cluvfy comp nodereach -n rklx1,rklx2

To verify that the other cluster nodes can be reached from the local node through all the available network interfaces or through specific network interfaces, use the component verification command nodecon as follows:

./cluvfy comp nodecon -n all

Verifying the Existence of Node Applications

To verify the existence of node applications, namely the virtual IP (VIP), Oracle Notification Services (ONS), and Global Service Daemon (GSD), on all the nodes, use the CVU comp nodeapp command, using the following syntax:

cluvfy comp nodeapp [ -n node_list] [-verbose]

Verifying the Integrity of Oracle Clusterware Components

To verify the existence of all the Oracle Clusterware components, use the component verification comp crs command, using the following syntax:

cluvfy comp crs [ -n node_list] [-verbose]

Verifying the Integrity of the Oracle Cluster Registry

To verify the integrity of the Oracle Cluster Registry, use the component verification comp ocr command, using the following syntax:

cluvfy comp ocr [ -n node_list] [-verbose]

Verifying the Integrity of Your Entire Cluster

To verify that all nodes in the cluster have the same view of the cluster configuration, use the component verification comp clu command, as follows:

cluvfy comp clu

How to delete everything from RAW Devices

Once the raw devices are created, use the dd command to zero out each device and make sure no old data remains on it:

# dd if=/dev/zero of=/dev/raw/raw1

# dd if=/dev/zero of=/dev/raw/raw2
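
Without a `count`, `dd` keeps writing until the device is full, which can take a while on large partitions. As a sketch, the helper below (hypothetical name `zero_head`) clears only the first N MiB. Whether a partial wipe is sufficient for a given OCR or voting device is an assumption to verify; the default of 100 MiB is arbitrary.

```shell
# Overwrite the first N MiB of a device (or file) with zeros.
# $1 = target, $2 = number of MiB to clear (defaults to 100, an arbitrary choice)
zero_head() {
  target="$1"
  mib="${2:-100}"
  # bs is given in bytes so the command does not depend on size suffixes;
  # conv=notrunc keeps a regular file's length unchanged when trying this on a file
  dd if=/dev/zero of="$target" bs=1048576 count="$mib" conv=notrunc 2>/dev/null
}

# Example (as root):
# zero_head /dev/raw/raw1 100
```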

Various Metalink Notes

How to map raw device on RHEL5 and OEL5 – Note 443996.1

Linux 2.6 Kernel Deprecation Of Raw Devices – Note 357492.1

How to Disable SELinux – Note 457458.1

Installation and Configuration Requirements – Note 169706.1

Configuring raw devices (singlepath) for Oracle Clusterware 10g Release 2 (10.2.0) on RHEL5/OEL5 – Note 465001.1

How to Setup UDEV Rules for RAC OCR & Voting devices on SLES10, RHEL5, OEL5 – Note 414897.1

How to Configure Linux OS Ethernet TCP/IP Networking – Note 132044.1

How to Clean Up After a Failed Oracle Clusterware (CRS) Installation – Note 239998.1

Requirements For Installing Oracle 10gR2 On RHEL/OEL 5 (x86) – Note 419646.1

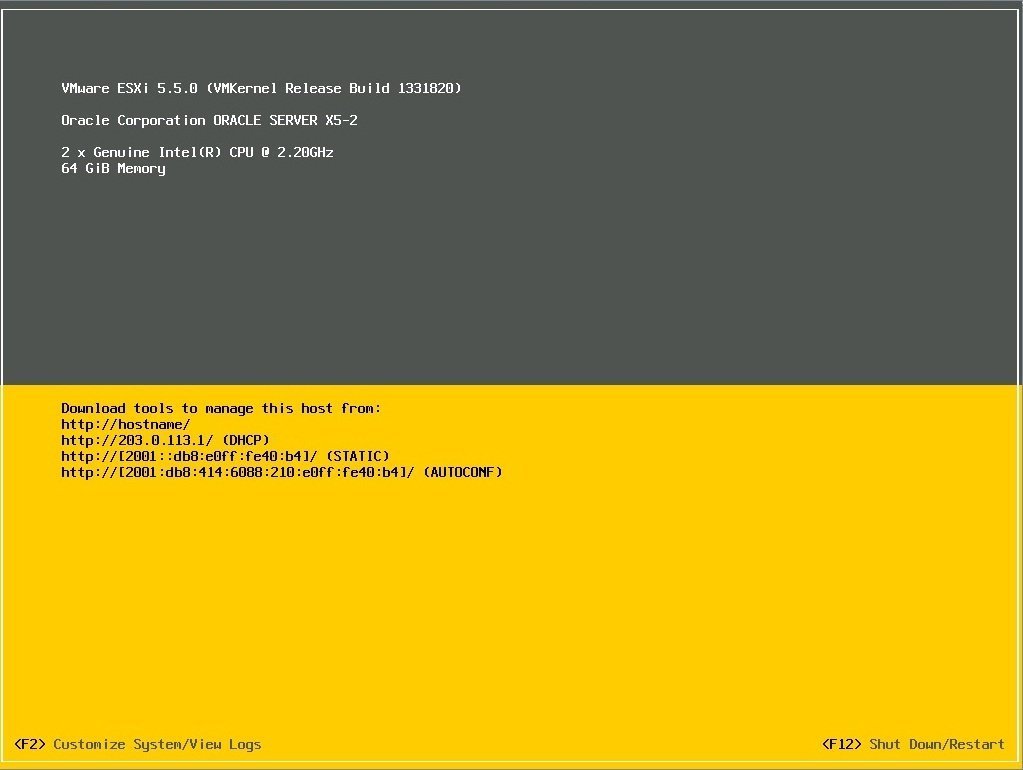

This article provides instructions on how to install a single instance Oracle Database 18c on Linux with storage on a Pure FlashArray. The objective is to provide an overview of the installation process with a focus on the steps that need to be carried out on the FlashArray.

Oracle provides two storage options for the database - File System and Automatic Storage Management (ASM in short). This document will go through the installation process for each. For detailed configuration options and instructions for installing the Oracle Database 18c, please refer to the Oracle Grid Infrastructure Installation Guide and the Oracle Database Installation Guide.

Before you begin, please go through the Oracle Database Installation Checklist and make sure all the prerequisites have been met.

Please review the recommendations provided in Oracle Database Recommended Settings for FlashArray and implement the ones that are applicable to your environment.

I. Prepare database host

1. Install prerequisite packages

1.1 Install sg3_utils. This provides useful SCSI utilities.

1.2 Oracle Universal Installer requires an X Window System so make sure X libraries are installed. Installing xclock will pull in the required dependencies and can also be used to test X Window System is installed correctly and in a working state.

For more details, please refer to Setting up X Window Display section in the Appendix.

1.3 Download and install the pre-install rpm.

Check the RPM log file to review the changes that the pre-install rpm made. On Oracle Linux 7, it can be found at /var/log/oracle-database-preinstall-18c/backup/<timestamp>/orakernel.log.

The pre-install RPM should install all the prerequisite packages needed for the installation. For reference, a complete list can be found at Operating System Requirements for x86-64 Linux Platforms.

You can check if one or more packages are installed using the following command.
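
The command itself is not reproduced in this copy. As a sketch, `rpm -q` exits with a non-zero status for a package that is not installed, which the small helper below uses to print only the missing ones. The helper name `missing_packages` and the package names in the example are illustrative.

```shell
# Print the name of every package in the argument list that is not installed.
missing_packages() {
  for pkg in "$@"; do
    # rpm -q exits non-zero when the package is not installed
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

# Example:
# missing_packages sg3_utils xorg-x11-apps oracle-database-preinstall-18c
```

An empty result means every package in the list is present.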

2. Create Users and Groups

2.1 Create operating system users and groups.

2.2 Add umask to .bash_profile of oracle and grid user
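
A minimal sketch of this step, assuming the commonly used value `umask 022` and the home directories `/home/oracle` and `/home/grid` (adjust both to your environment). The helper name `ensure_umask` is hypothetical; it appends the line only when the profile has no umask setting yet.

```shell
# Append "umask 022" to a profile file unless a umask line is already there.
ensure_umask() {
  profile="$1"
  grep -q '^[[:space:]]*umask[[:space:]]' "$profile" 2>/dev/null ||
    echo 'umask 022' >> "$profile"
}

# Example:
# ensure_umask /home/oracle/.bash_profile
# ensure_umask /home/grid/.bash_profile
```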

3. Add Host entries

3.1 Add entries to /etc/hosts

3.2 Add entries to /etc/hostname

3.3 Set ORACLE_HOSTNAME in .bash_profile file.

4. Update UDEV rules

Create a rules file called /etc/udev/rules.d/99-pure-storage.rules with the settings described below so that the settings get applied after each reboot.

4.1 Change Disk I/O Scheduler

Disk I/O schedulers reorder, delay, or merge requests for disk I/O to achieve better throughput and lower latency. Linux comes with multiple disk I/O schedulers, like Deadline, Noop, Anticipatory, and Completely Fair Queuing (CFQ).

Pure Storage recommends that noop scheduler be used with FlashArrays. Here is an example of how a rule can be formulated.
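
The example rule is not reproduced in this copy. The sketch below writes one possible rule. The match keys (`KERNEL=="sd*[!0-9]"` and `ENV{ID_VENDOR}=="PURE"`) are assumptions about how FlashArray devices appear on the host and should be checked with `udevadm info` before use; the function name is hypothetical.

```shell
# Write a udev rule that selects the noop scheduler for FlashArray block devices.
# $1 = rules file to create, e.g. /etc/udev/rules.d/99-pure-storage.rules
write_scheduler_rule() {
  cat > "$1" <<'EOF'
# Use the noop scheduler for Pure Storage FlashArray devices
ACTION=="add|change", KERNEL=="sd*[!0-9]", SUBSYSTEM=="block", ENV{ID_VENDOR}=="PURE", ATTR{queue/scheduler}="noop"
EOF
}

# Example (as root), followed by a reload so the rule applies without a reboot:
# write_scheduler_rule /etc/udev/rules.d/99-pure-storage.rules
# udevadm control --reload-rules && udevadm trigger
# cat /sys/block/sdb/queue/scheduler   # sdb is a placeholder; the active scheduler is shown in [brackets]
```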

4.2 Collection of entropy for the kernel random number generator

Some I/O events contribute to the entropy pool for /dev/random. Linux uses the entropy pool for things like generating SSH keys, SSL certificates or anything else crypto. Preventing the I/O device from contributing to this pool isn't going to materially impact the randomness. The add_random queue parameter can be set to 0 to reduce this overhead.

4.3 Set rq_affinity

By default this value is set to 1, which means that once an I/O request has been completed by the block device, it will be sent back to the 'group' of CPUs that the request came from. This can sometimes help to improve CPU performance due to caching on the CPU, which requires fewer cycles.

If this value is set to 2, the block device will send the completed request back to the actual CPU that requested it, not to the general group. If you have a beefy CPU and really want to utilize all the cores and spread the load around as much as possible then a value of 2 can provide better results.
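
Both the entropy setting and the completion affinity live under the device's `queue` directory in sysfs (`add_random` and `rq_affinity`), so they can be set from the same rules file. The sketch below appends two rules to an existing file. The match keys are assumptions to verify on your host, the function name is hypothetical, and the value 2 for `rq_affinity` is the option discussed above rather than a universal recommendation.

```shell
# Append udev rules for entropy collection and completion affinity.
# $1 = rules file to extend, e.g. /etc/udev/rules.d/99-pure-storage.rules
append_queue_rules() {
  cat >> "$1" <<'EOF'
# Do not use FlashArray I/O events as an entropy source
ACTION=="add|change", KERNEL=="sd*[!0-9]", SUBSYSTEM=="block", ENV{ID_VENDOR}=="PURE", ATTR{queue/add_random}="0"
# Complete each request on the CPU that issued it
ACTION=="add|change", KERNEL=="sd*[!0-9]", SUBSYSTEM=="block", ENV{ID_VENDOR}=="PURE", ATTR{queue/rq_affinity}="2"
EOF
}

# Example (as root):
# append_queue_rules /etc/udev/rules.d/99-pure-storage.rules
```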

5. HBA I/O Timeout Settings

Though the Pure Storage FlashArray is designed to service IO with consistently low latency, there are error conditions that can cause much longer latencies. It is important to ensure dependent servers and applications are tuned appropriately to ride out these error conditions without issue. By design, given the worst case for a recoverable error condition, the FlashArray will take up to 60 seconds to service an individual IO so we should set the same value at the host level. Please note that 0x3c is hexadecimal for 60.

Edit /etc/system and either add the sd setting (if not present) or modify it as follows:

II. Prepare storage on the FlashArray

1. Create the volumes

As the FlashArray is built using solid-state technology, the design considerations that were very important for spinning-disk storage are no longer valid. We do not have to worry about distributing I/O over multiple disks, so there is no need to make sure that tables and their indexes are on different disks. We can place our entire database on a single volume without any performance implication. One thing to keep in mind when deciding on the number of volumes to create is that performance and data reduction statistics are captured and stored at the volume level.

In this installation, we will create three volumes for the database: one for data and temp files, one for redo log files, and one for the Fast Recovery Area.

1.1 Go to Storage -> Volumes, and click on the plus sign on the Volumes section.

1.2 Create a volume for the Oracle Home

1.3 Create one or more volumes for database Data and Temp files

1.4 Create one volume for the Redo Log files.

1.5 Create one or more volumes for the Fast Recovery Area

2. Create the host

2.1 Go to Storage->Hosts and click on the plus icon in the Hosts section.

2.2 The Create Host dialog will popup. Enter the name for the host that we are going to add. Note that this can be any name you think would best identify your host in the array, and does not have to be the operating system hostname. Click on Create to create a host object.

2.3 The host will now appear in the Hosts section. Click on the name to go to the Host details page.

2.4 Click on the Menu icon at the top right corner of the Host Ports section. A drop-down menu with a list of options will appear. This is where we set up the connectivity between the FlashArray and the Host. You need to choose the configuration option depending on the type of network we are using to connect.

In this case, we are connecting over a Fibre Channel network, so we select the option to Configure WWNs.

For an iSCSI network, one would select the option to Configure IQNs.

For an NVMe over Fabrics network, one would select the option to Configure NQNs.

For more details on how to identify the port names, please refer to Finding Host Port Names section in the Appendix.

2.5 On selecting Configure WWNs... from the menu, a dialog similar to the one below will be displayed with a list of available WWNs in the left pane. On this demo host the list is empty, as the WWNs have already been added to the host object.

2.6 Once the WWNs corresponding to the host are selected, they will appear in the Host Ports panel.

3. Connect the Volumes

3.1 Click on the Menu icon on the Connected Volumes panel. From the drop-down menu, select Connect...

3.2 Select the volumes that we created in Step 1 and click Connect.

3.3 The volumes will now appear under the Connected Volumes panel for the host.

3.4 Clicking on a volume name will show the details page where we can find the volume serial number.

4. Setup volumes on the host

After the volumes are created on the FlashArray and connected to the host, we need to configure them before we can start the Oracle installer.

4.1 For the volumes to show up on the host, we need to rescan the SCSI bus.
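
One way to trigger the rescan is the `rescan-scsi-bus.sh` script shipped with sg3_utils. As an alternative sketch, the helper below writes the wildcard `- - -` (channel, target, LUN) to the `scan` file of every SCSI host in sysfs. The function name is hypothetical, and the directory argument exists only so the helper can be tried against a scratch directory.

```shell
# Ask every SCSI host adapter to scan for new devices.
# $1 = sysfs directory holding the hostN entries (defaults to the real one)
rescan_scsi_hosts() {
  base="${1:-/sys/class/scsi_host}"
  for host in "$base"/host*; do
    # "- - -" is a wildcard for channel, target and LUN
    [ -e "$host/scan" ] && echo "- - -" > "$host/scan"
  done
  return 0
}

# Example (as root):
# rescan_scsi_hosts
```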

4.2 Check the volumes are visible on the host using commands like lsscsi or lsblk.

4.3 Edit multipath.conf file and create aliases for the volumes.

The wwid can be obtained by prefixing 3624a9370 to the volume serial number (in lower case) obtained in step 3.4 above.
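
The prefixing rule can be expressed as a small helper. This is a sketch: the function names, the serial number and the alias in the example are made up, and the generated stanza is meant to be placed inside the `multipaths { ... }` section of multipath.conf.

```shell
# Build the multipath WWID for a FlashArray volume from its serial number.
wwid_from_serial() {
  # Lower-case the serial and add the prefix given in the text
  printf '3624a9370%s\n' "$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')"
}

# Print a multipath.conf "multipath" stanza that maps the WWID to an alias.
# $1 = volume serial number, $2 = alias to assign
multipath_alias() {
  printf '    multipath {\n        wwid  %s\n        alias %s\n    }\n' \
    "$(wwid_from_serial "$1")" "$2"
}

# Example with a made-up serial number:
# multipath_alias 1A2B3C4D5E6F708000011010 oracle_home
```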

4.4 Create a filesystem on the volume for Oracle Home

4.5 Create a mount point

4.6 Add an entry to /etc/fstab

4.7 Mount the volume
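
Steps 4.4 to 4.7 can be sketched as a single helper. Everything specific here is an assumption: the ext4 filesystem, the `defaults` mount options, the device path and the mount point are placeholders, and the function name `prepare_volume` is hypothetical.

```shell
# Create a filesystem on a volume, mount it, and make the mount persistent.
# $1 = device, $2 = mount point, $3 = fstab file (defaults to /etc/fstab)
prepare_volume() {
  device="$1"
  mount_point="$2"
  fstab="${3:-/etc/fstab}"
  mkfs -t ext4 "$device" || return 1      # 4.4 create the filesystem
  mkdir -p "$mount_point" || return 1     # 4.5 create the mount point
  # 4.6 add the fstab entry only once
  grep -q "^$device[[:space:]]" "$fstab" 2>/dev/null ||
    echo "$device $mount_point ext4 defaults 0 0" >> "$fstab"
  mount "$device" "$mount_point"          # 4.7 mount the volume
}

# Example (as root), using a hypothetical multipath alias:
# prepare_volume /dev/mapper/oracle_home /u01
```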

III a. Install Oracle Database on File System

Configure Database Storage

As the database will be created on File System, we need to create the mount points and file system on the volumes before we can mount them.

1. Create file systems on the database volumes.

2. Create the mount points

3. Add entries to /etc/fstab

4. Mount the volumes

5. Change ownership of the mount points to Oracle owner.

Create ORACLE HOME and unzip software

1. Create directories and set the permissions

2. Unzip software as the oracle user.

Start the installer

Select “Set Up Software Only” as we will use dbca to create the database after the software installation is complete.

Select 'Single instance database installation' as we are installing a single-instance database.

Select the database edition you would like to install.

Specify the directory for ORACLE_BASE.

Here you can specify the OS group for each Oracle privilege group. Accept the default settings and click the “Next” button.

Fix any issues identified by the prerequisite checks. You can select Ignore All checkbox to proceed if you understand the implications.

Oracle needs a confirmation that you understand the impact and would like to proceed.

The installer provides a summary of selections made. Click on Install to proceed.

Installation in progress...

The root.sh script located in ORACLE_HOME needs to be run as the root user from another window. Once it has executed, click OK.

Software installation is successful.

Now that the database software is installed, create the database using the Database Configuration Assistant (dbca).

Select Create a database

Choose Typical or Advanced. Advanced gives more options to customize so we select that.

Select type of database you want to create.

Provide database identification details

Select database storage option. In this section, we are installing on the file system.

Specify the location of the Fast Recovery Area and enable Archiving.

Create a new Listener. We do not have any as this is a fresh install.

Select Oracle Data Vault Configuration options

Select Instance configuration options

Specify Management options. If you already have EM Cloud Control installed, you can provide the details here to bring this database under management.

Specify database credentials for administrative accounts

Select Database Creation option

Review the summary of selections made. If everything looks good, click on Finish.

Database creation in progress..

Database creation complete.

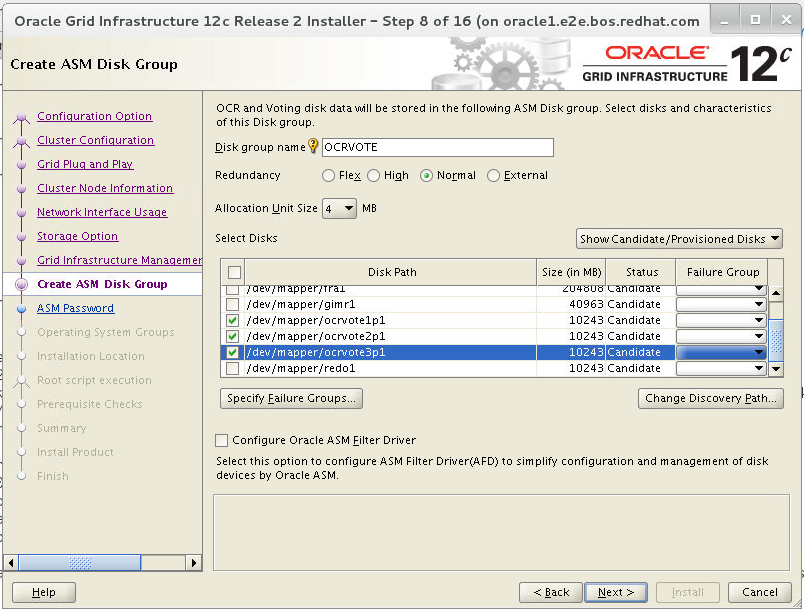

III b. Install Oracle Database on Automatic Storage Management (ASM)

Oracle ASM is installed as part of an Oracle Grid Infrastructure installation. As we are going to use Oracle ASM for storage, we first need to install Oracle Grid Infrastructure before we install and create the database.

Before we start the Grid Installation, we need to decide how we would like to make the storage available to ASM. There are three options for drivers/device persistence :

- UDEV rules

- ASMLIB

- ASM Filter Driver (AFD)

In this example installation, we will be using ASMLIB to configure storage. For more information on setting up storage using UDEV rules or ASM Filter Driver, please refer to the ASM Device Persistence options section in the Appendix.

Configure Database Storage using ASMLIB

1 Download and install ASMLib

2 Run the oracleasm initialization script with the configure option.

The script will prompt for the user and group to own the driver interface. That would be grid and asmadmin respectively. To questions on whether it should start the ASM Library driver on boot, and whether it should scan for ASM disks on boot, we need to answer Yes to both.

3 Enter the following command to load the oracleasm kernel module.

To check the status of the oracleasm driver, run the status command.

4 Now that the driver is loaded, we can go ahead and create ASM disks

To check if the asm disks have been created successfully, run the listdisks command.

To check the status of each disk returned by the above command, run the querydisk command.
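
The commands for steps 2 to 4 are not reproduced in this copy. The sketch below strings the corresponding `oracleasm` subcommands together. The function name, the disk label and the device path are hypothetical, and `oracleasm configure -i` is interactive, so expect the prompts described above.

```shell
# Configure ASMLib and label one device as an ASM disk (run as root).
# $1 = ASM disk label, $2 = block device to label
setup_asmlib_disk() {
  label="$1"
  device="$2"
  oracleasm configure -i || return 1   # prompts for owner, group, start on boot, scan on boot
  oracleasm init || return 1           # load the oracleasm kernel module
  oracleasm status || return 1         # confirm the driver is loaded
  oracleasm createdisk "$label" "$device" || return 1
  oracleasm listdisks                  # list every labelled disk
  oracleasm querydisk "$label"         # check the disk just created
}

# Example; the device path is a placeholder:
# setup_asmlib_disk DATA01 /dev/mapper/oracle_data01
```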

For more information on Oracle ASMLib, please refer to Automatic Storage Management feature of the Oracle Database.

Create GRID HOME and unzip software

1. Create directories and set the permissions

2. Unzip software

3. Install the cluster verify utility

Start Grid Infrastructure Installer

As we are installing a single instance database, select Configure Oracle Grid Infrastructure for a Standalone Server (Oracle Restart)

Enter the name of the Disk Group for database files.

Next, click on Change Discovery Path button to change the path to where our disks can be located.

As we are using ASMLIB, we set the discovery path to /dev/oracleasm/disks.

The ASMLIB disks should now appear. Select the disks that should be part of the DATA disk group. In this example, we have only one.

Change Redundancy setting to External as the FlashArray comes with always-on RAID-HA out of the box.

Also, as we are using ASMLIB, we will leave Configure ASM Filter Driver unchecked.

On clicking Next, we will get the following popup. Click on Yes and continue.

Enter the desired passwords.

Select operating system groups.

You can run the root.sh script manually when prompted, or select the options like below to have the installer run it automatically.

Review the results of the prerequisite check. If any issues are found, it is recommended to fix them before proceeding.

If you choose to ignore some of the issues found by the checks as we have done above, the installer will show the following warning.

Review the summary of inputs and settings, and click on Install to proceed.

Once the installer completes, Grid Infrastructure is installed and the ASM instance is up and running. This can be verified by running the asmcmd command.

Install Oracle Database

1. Create directories and set the permissions

2. Unzip software as the oracle user.

3. Start the installer

Select the disk group for the database files. DATA is the disk group we created while installing the Grid Infrastructure.

Enter the desired passwords

Select OS group for each privilege.

Prerequisite checks are performed. Fix issues identified before proceeding. Ignore only if you know what you are doing and understand the implications.

Summary of inputs and options selected

Installation in progress

Installation complete!

The database should be up and running now. If you have not done so already, you may want to update the .bash_profile script for the grid and oracle users, and set the environment variables like below.
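
The original settings are not reproduced in this copy. As a sketch for the oracle user, the lines below show the kind of variables typically set. Every path and the SID are placeholders to replace with the values chosen during installation; the grid user would point `ORACLE_HOME` at the Grid home and use the ASM instance name as `ORACLE_SID`.

```shell
# Example settings for the oracle user; every path and the SID are placeholders
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME="$ORACLE_BASE/product/18.0.0/dbhome_1"
export ORACLE_SID=orcl
# Put the Oracle binaries first on the search path
export PATH="$ORACLE_HOME/bin:$PATH"
# Add the Oracle libraries, keeping any existing value
export LD_LIBRARY_PATH="$ORACLE_HOME/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
```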

Before releasing the database to the users, please make sure that the recommendations provided in Oracle Database Recommended Settings for FlashArray have been applied.

Appendix

1. Finding Host Port Names

Host Port Names are needed for connecting the Host to the Storage Array. The terminology as well as the command to find the Port Name depends on the type of the network.

1.1 Fibre Channel

All FC devices have a unique identity that is called the Worldwide Name or WWN. Find the WWNs for the host HBA ports

If systool is installed, run the following command (systool is part of sysfsutils package and can be easily installed using yum).

Else run the following command.
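
Neither command is reproduced in this copy. As a sketch, the port names can be read from the `fc_host` class in sysfs, and `systool` exposes the same attribute. The function name is hypothetical, and the directory argument exists only so the helper can be tried against a scratch directory.

```shell
# Print the port name (WWN) of every Fibre Channel HBA port known to the kernel.
# $1 = sysfs directory with the fc_host entries (defaults to the real one)
list_fc_wwns() {
  base="${1:-/sys/class/fc_host}"
  for port in "$base"/host*/port_name; do
    [ -r "$port" ] && cat "$port"
  done
  return 0
}

# With sysfsutils installed, a roughly equivalent query is:
# systool -c fc_host -v | grep port_name
```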

1.2 iSCSI

In case of iSCSI, the port name is called iSCSI Qualified Name or IQN. If iSCSI has been setup on a host, the IQN is stored in /etc/iscsi/initiatorname.iscsi.
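
A minimal sketch for reading the IQN, assuming the file holds a line of the form `InitiatorName=iqn...`; the function name `get_iqn` is hypothetical.

```shell
# Print the initiator IQN from an initiatorname.iscsi file.
# $1 = file to read (defaults to /etc/iscsi/initiatorname.iscsi)
get_iqn() {
  file="${1:-/etc/iscsi/initiatorname.iscsi}"
  # Strip the "InitiatorName=" key and print what follows it
  sed -n 's/^InitiatorName=//p' "$file"
}

# Example:
# get_iqn
```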

1.3 NVMe over Fabrics

In case of NVMe-oF, the port name is called NVMe Qualified Name or NQN. If NVMe-oF has been set up on a host, the NQN is stored in /etc/nvme/hostnqn.

2. ASM Device Persistence options

2.1 Oracle ASM Filter Driver (ASMFD)

Oracle ASM Filter Driver (Oracle ASMFD) was introduced in Oracle Database 12c Release 1 (12.1.0.2). It rejects write I/O requests that are not issued by Oracle software. This write filter helps to prevent users with administrative privileges from inadvertently overwriting Oracle ASM disks, thus preventing corruption in Oracle ASM disks and files within the disk group.

Oracle ASMFD simplifies the configuration and management of disk devices by eliminating the need to rebind disk devices used with Oracle ASM each time the system is restarted.

After Grid Software is unzipped and before the Grid Installer is started, disks need to be configured for use with ASMFD.

a. Login as root and set ORACLE_HOME and ORACLE_BASE

We set ORACLE_BASE to a temporary location to create temporary and diagnostic files.

b. Use the ASMCMD afd_label command to provision disk devices for use with Oracle ASM Filter Driver.

c. Use the ASMCMD afd_lslbl command to verify the device has been marked for use with Oracle ASMFD. For example:

d. Unset ORACLE_BASE

e. Change permission on the devices
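
Steps a to d can be sketched as follows. The function name, the label and the device path are placeholders, and `/tmp` stands in for the temporary ORACLE_BASE mentioned above. Step e (device permissions) depends on your owner and group names and is left out.

```shell
# Label a device for Oracle ASMFD before starting the Grid installer (run as root).
# $1 = Grid home, $2 = ASMFD label, $3 = device to label
label_afd_disk() {
  ORACLE_HOME="$1"
  ORACLE_BASE=/tmp                       # temporary location for diagnostic files
  export ORACLE_HOME ORACLE_BASE
  "$ORACLE_HOME/bin/asmcmd" afd_label "$2" "$3" --init || return 1
  "$ORACLE_HOME/bin/asmcmd" afd_lslbl "$3"   # verify the label
  unset ORACLE_BASE
}

# Example; the Grid home and device path are placeholders:
# label_afd_disk /u01/app/18.0.0/grid DATA1 /dev/mapper/oracle_data01
```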

After the ASMFD disks are labeled, the installation process is the same as ASMLIB process detailed above, except for a change in the following screen.

Firstly, the disk discovery string needs to be changed to AFD:*.

Secondly, the Configure Oracle ASM Filter Driver checkbox highlighted below needs to be selected.

On Linux, if you want to use Oracle ASM Filter Driver (Oracle ASMFD) to manage your Oracle ASM disk devices, then you must deinstall Oracle ASM library driver (Oracle ASMLIB) before you start the Oracle Grid Infrastructure installation as the two are not compatible.

2.2 Oracle ASMLIB

The Oracle Automatic Storage Management library driver simplifies the configuration and management of block disk devices by eliminating the need to rebind block disk devices used with Oracle Automatic Storage Management (Oracle ASM) each time the system is restarted.

With Oracle ASMLIB, you define the range of disks you want to have made available as Oracle ASM disks. Oracle ASMLIB maintains permissions and disk labels that are persistent on the storage device, so that the label is available even after an operating system upgrade.

The detailed steps for setting up ASMLIB have been provided in the main section above.

2.3 UDEV Rules

Device persistence can be configured manually for Oracle ASM using UDEV rules.

For more details, please refer to Configuring Device Persistence Manually for Oracle ASM.

3. Setting up X Window Display

Install the xclock program to test if the X windowing system is properly installed and working. The yum installer will pull in the required dependencies.

After installing xclock, run it on the command line first as root user and then as oracle and grid (if applicable) user.

If a small window pops up with a clock display, that confirms that X Window System is working properly and it is OK to run the Oracle Installer.

Please note that you will need an X Window System terminal emulator to start an X Window System session.

One of the common issues on Linux is that xclock works fine when executed as the root user, but errors out with Error: Can't open display: when executed as any other user.

To fix this issue, perform the following steps.

1. Login as the root user.

a. Run the xauth list $DISPLAY command and make a note of the output.

b. Make a note of the DISPLAY setting for the root user

2. Now login as the non-root user for which xclock is not working.

a. Run the xauth add command with the value returned

b. Set the DISPLAY variable to the same setting as root.

xclock should work now from non-root user as well.
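
The two steps can be sketched as a pair of helpers. The function names are hypothetical, and the cookie line and DISPLAY value in the example are invented placeholders for the values noted in step 1.

```shell
# Step 1 (as root): print the cookie and the DISPLAY value to copy.
show_x_cookie() {
  xauth list "$DISPLAY"
  echo "DISPLAY=$DISPLAY"
}

# Step 2 (as the non-root user): import the cookie and reuse root's DISPLAY.
# $1 = the full line printed by "xauth list", $2 = root's DISPLAY value
import_x_cookie() {
  # $1 is left unquoted on purpose so it splits into display name, protocol and key
  xauth add $1
  export DISPLAY="$2"
}

# Example:
# import_x_cookie "myhost/unix:10  MIT-MAGIC-COOKIE-1  0123456789abcdef" localhost:10.0
# xclock
```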